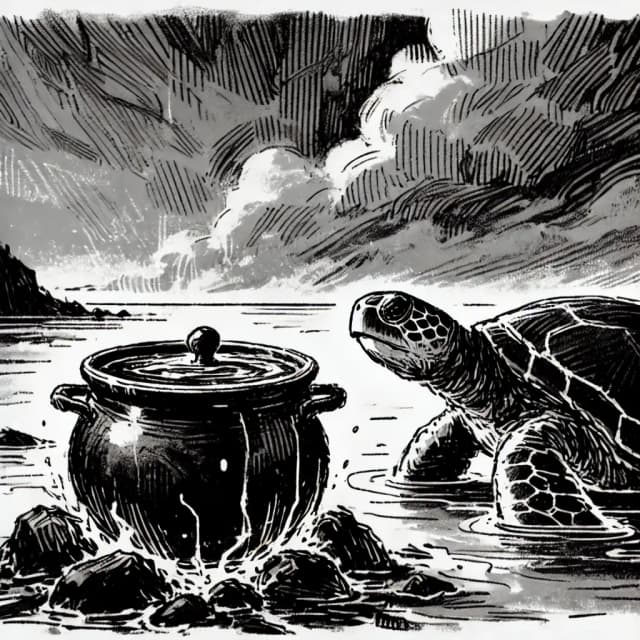

Turtle Benchmark

A crowdsourced, cheat-proof benchmark for evaluating LLM reasoning & understanding capabilities.

We first noticed in an online lateral thinking puzzle game that, when acting as judges, many LLMs fall far short of humans in both reasoning and the accuracy of their judgments on human questions. We hope the Turtle Benchmark can serve as a standardized test metric for evaluating LLMs' reasoning and understanding abilities, helping researchers and AI companies improve their models.